Why the Data Platform Is the Most Important Internal Tool

I was inspired to write this post while reading Packy McCormick's Not Boring article on Rox, a new investment of his. Here is my case for why the data platform is the most important internal tool.

In the article, a few stats stuck out to me:

“The underlying data that drives Salesforce left Salesforce five years back,” Ishan explained. “It moved to the data warehouse, provided by companies like Snowflake. In fact, 40% of all data in data warehouses is customer data.”

I didn’t realize the share of customer data was so high. The statistic that 90% of 300 companies have built internal CRM tools also makes a lot of sense. The first project for a lot of data teams is financial reporting, which creates more insight into sales and CRM: use Fivetran to dump all 800 of those Salesforce tables into Snowflake and get an accurate picture of what is truly going on in your business. The question I then ask myself is: does this mean the Modern Data Stack won? I thought it was dead. It was a marketing term that inspired a ‘movement,’ but a great test of whether it is valuable is whether the system of record is moving to the data warehouse. This shift makes the case that the data platform will be the most critical tool for companies to win in AI.

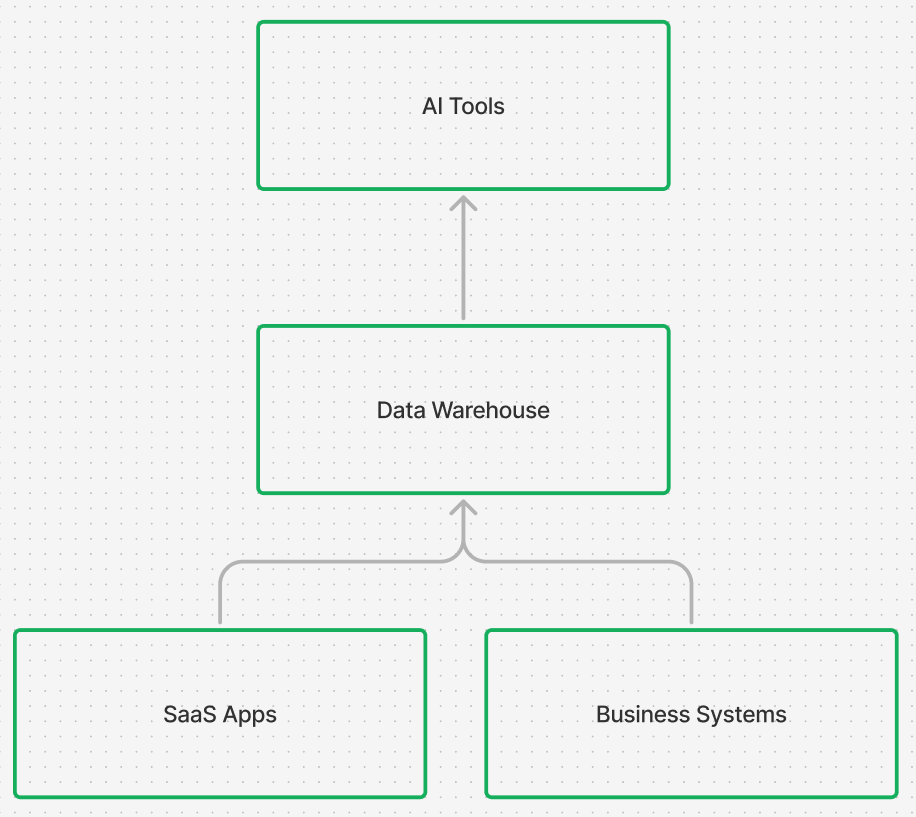

As teams go to build AI products and internal tools, they want and need a centralized data source: the glorified single source of truth that we are all still trying to find. The issue with most AI tools that we purchase today is that they are siloed. They can only automate or add value within the small point-solution workflow they own. This is why SaaS will struggle; it fought hard to get your data, but with only a small sliver of the workflow, that data can only help so much. A HubSpot copilot is locked to HubSpot, a dbt copilot is locked to dbt, and a text-to-SQL tool in Snowflake is locked to that ecosystem. With every data platform wanting to own the entire stack, those tools will stay that way.

Where does this lead us? It means that teams need to centralize their tools into the warehouse. The incentive to build a well-governed, holistic data platform to leverage AI has never been higher. Centralize, clean, and use all that data to empower the tools and people to move mountains. This is where data teams can bring huge ROI to businesses. This is what makes the data platform the most important internal tool. Those who can deliver on this vision will have a competitive advantage. Mixing external data with clean and trustworthy internal datasets will create billions of dollars in opportunities for teams.

This is also true for the tools across your data stack, which are fragmented. A large tailwind we see with Artemis is that data teams are hungry for tools that work across the stack. With Artemis, you centralize the metadata and error logs siloed across individual tools and combine them into one tool to automate workflows across the stack. We don’t look at your tools in isolation. We take a platform approach.

When teams built on the Modern Data Stack (MDS), no guardrails were put in place. The amount of bloat is staggering. We speak with teams that have 500+ data models when, in reality, they only need 60. The average team we speak with spends 1.5 to 3 times their warehouse budget. This bloat not only makes platforms more expensive but also adds complexity. That complexity leads to teams not understanding what their data is doing and how it impacts the organization. It means no one trusts the data. Our motto is “simple is best.” The less you have to do, the better. Clearing out the junk and getting data platforms in shape should be step 1 in using AI.
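To make the bloat point concrete, here is a minimal sketch of one way to spot pruning candidates. It is an illustration under stated assumptions, not a description of how any particular product does it: it assumes you can export model lineage as a simple mapping from each model to its upstream models, and that you know which models are actually read by dashboards, exports, or apps. The model names, the `lineage` dict, and both functions are made up for this example.

```python
from collections import deque


def models_in_use(parents, consumed):
    """Return the consumed models plus everything they depend on upstream.

    parents:  dict mapping a model name to the list of models it selects from.
    consumed: names of models that something downstream actually reads.
    """
    needed = set()
    queue = deque(consumed)
    while queue:
        model = queue.popleft()
        if model in needed:
            continue
        needed.add(model)
        # Walk upstream: anything feeding a needed model is also needed.
        queue.extend(parents.get(model, []))
    return needed


def prune_candidates(parents, consumed):
    """Return models that nothing consumed depends on, sorted by name."""
    all_models = set(parents)
    for upstream in parents.values():
        all_models.update(upstream)
    return sorted(all_models - models_in_use(parents, consumed))


# Hypothetical lineage for a small project (names are illustrative only).
lineage = {
    "stg_accounts": [],
    "stg_opportunities": [],
    "stg_legacy_leads": [],
    "fct_pipeline": ["stg_accounts", "stg_opportunities"],
    "fct_pipeline_v2_tmp": ["stg_opportunities"],
    "rpt_leads_2019": ["stg_legacy_leads"],
}
consumed = ["fct_pipeline"]  # assumption: the only model a dashboard reads

candidates = prune_candidates(lineage, consumed)
print(candidates)
```

In this toy project, half of the models feed nothing that anyone reads. A real audit would also need to account for ad hoc queries and scheduled exports before deleting anything, so treat the output as a review list rather than a delete list.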