The Natural Evolution of Data Platforms

Why do data platforms always get bloated and chaotic at scale? Are processes and tools the right way to fix this problem?

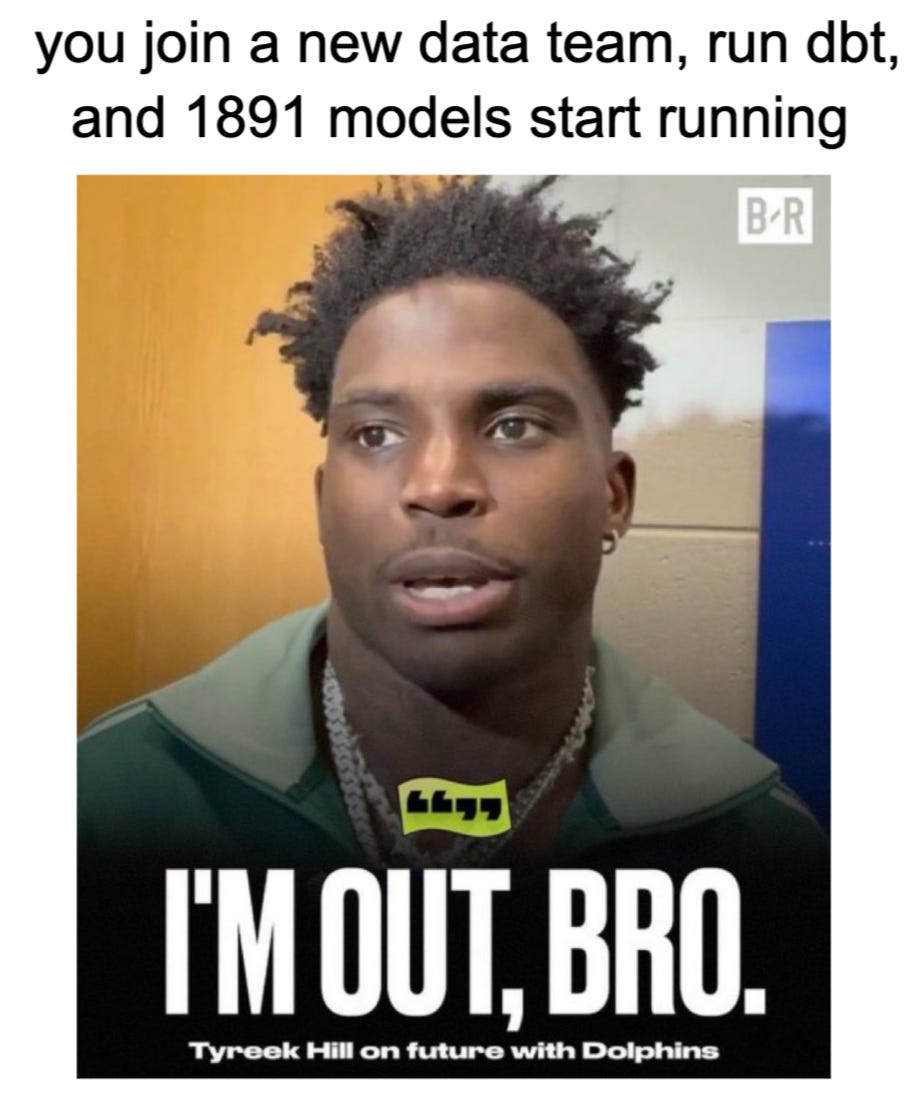

A familiar picture emerges whenever I talk with data engineers or managers. They describe multiple BI tools, years of accumulated dbt models, and warehouse costs that climb steadily to reflect the bloat. What strikes me is how predictably this happens across organizations. As data teams grow and evolve, they often end up with:

Multiple BI tools serving different purposes.

Years of dbt models written by different team members, often solving similar problems differently.

A steady stream of ad-hoc queries and analysis, some of which could be consolidated into proper data models.

Significant compute costs that are not necessary.

I posted on this topic about a month ago, and the typical response was that this could be solved with the proper foundation and process.

While there is some truth in those statements, why does this still happen to almost every team that has scaled a data platform? How do data platforms become so messy in the first place?

After thinking about this for a while, I believe the answer, annoying as it is, could be human nature. Looking beyond data engineering to other fields like software development and project management, or even our own homes, we see how clutter, mess, and disorganization naturally accumulate over time.

A large part of the reason is that it takes focus and energy to stay on top of the mess. It’s also not fun work. It is not fun taking the trash out, just as it’s not fun combing through a model someone else wrote to find and fix bugs. The benefit (and curse) is that, unlike in real life, the trash can continue to pile up on our data platforms without many people noticing.

As people, we almost always take the path of least resistance. We don’t finish the documentation because it’s annoying. We don’t spend the time to see if a model already accomplishes what we need, so instead, we build a new one. The modern data stack has reinforced this habit: for the longest time, the answer has been to add. Add another model, buy another solution, hire more analytics engineers.

So, sure, having a good model foundation, principles, and processes helps reduce or alleviate the chaos within a data platform. However, tech debt and bloat are going to happen.

Now that we know this, how can data teams stay on top of these issues and reduce them over time? This is a question a lot of data teams are asking themselves. From the teams we have spoken to, there are a few outcomes.

You do nothing and hope it’ll figure itself out one day. (Spoiler: it won’t.)

You commission a team of 1 or 2 engineers to focus on this project. They spend 6-9 months on it and are pulled away from other projects.

You do the opposite and commission 1-2 engineers to focus on new work while the rest of the team is set on fixing the foundation. This is a costly outcome.

You use Artemis.

Teams need a maniacal focus on maintaining a simple yet effective data platform. They must be diligent in their work to ensure the platform scales appropriately. This is the hard part of the modern data stack, and with the new trends around cost cutting, budget realignment, and vendors being cut, this complexity issue is being brought to the forefront.

However, the issue for many teams is that they are being asked to trim a platform they did not build themselves while still delivering all of their regular work. This lack of context makes fixing issues and cutting tech debt extremely challenging. You need either a person dedicated to this or a tool that monitors your platform, surfaces issues, and resolves them.

The reality is that this isn't about disorganization. It's about the natural evolution of data teams and platforms. As teams scale, "accidental complexity" appears, where solving immediate business needs gradually creates a more complicated system than anyone intended.

If your platform is bloated, reach out!

About Artemis

Artemis monitors your data stack, finds issues, and automatically resolves them. Our users approve over 120 insights, merge 60+ PRs, and save over 20 hours a week. There is no need to migrate; our platform integrates with your data stack within 15 minutes!